My title is an expression you hear quite often, the exclamation mark denoting how surprising it seems when, for example, you walk into a shop and find yourself behind your friend in the queue (especially if you were just thinking about her), or if perhaps the person at the next desk in your office turns out to have the same birthday as you.

But by considering the laws of probability you can come to the conclusion that such things are less unlikely than they seem. Here’s a way of looking at it: suppose you use some method of generating random numbers, say between 0 and 100, and then plot them as marks on a scale. You’ll probably find blank areas in some parts of the scale, and tightly clustered clumps of marks in others. It’s sometimes naively assumed that, if the numbers are truly random, they should be evenly spread across the scale. But a simple argument shows this to be mistaken: there are in fact relatively few ways to arrange the marks evenly, but a myriad ways of distributing them irregularly. Therefore, by elementary probability, it is overwhelmingly likely that any random arrangement will be of the irregular and clumped sort.
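
One way to put a rough number on that argument is the short Python sketch below. It rests on simplifying assumptions that are not in the argument above: the scale is cut into n equal bins, n marks are dropped independently at random, and "evenly spread" is taken to mean exactly one mark in every bin. The function name is purely illustrative.

```python
from math import factorial


def prob_perfectly_even(n: int) -> float:
    """Chance that n random marks dropped into n equal bins land one per bin."""
    # There are n! ways to put exactly one mark in each bin,
    # out of n**n equally likely ways to place the marks overall.
    return factorial(n) / n**n


for n in (5, 10, 20):
    print(n, prob_perfectly_even(n))
```

Under these assumptions, with only 10 marks and 10 bins the chance of a perfectly even spread is already about 0.036%, and it shrinks rapidly as the number of marks grows.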

To satisfy myself, I’ve just done this exercise – and to make it more visual I have generated the numbers as 100 pairs of coordinates, so that they are spread over a square. Already it looks gratifyingly clumpy, as probability theory predicts. So, to stretch and reapply the same idea, you could say it’s quite natural that contingent events in our lives aren’t all spaced out and disjointed from one another in a way that we might naively expect, but end up being apparently juxtaposed and connected in ways that seem surprising to us.
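
For anyone who wants to try something similar, here is a minimal Python sketch of such an exercise. It is not the method used for the picture in this post, just one plausible version: it assumes a unit square divided into a 10 by 10 grid (both choices are arbitrary), and the function name and seed are hypothetical. It scatters 100 random points, then counts how many grid cells stay empty and how many collect three or more points.

```python
import random
from collections import Counter


def cell_counts(n_points=100, grid=10, seed=None):
    """Scatter points uniformly over a unit square; return points per grid cell."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_points):
        x, y = rng.random(), rng.random()
        # min() is a defensive guard so an index can never reach `grid`
        col = min(int(x * grid), grid - 1)
        row = min(int(y * grid), grid - 1)
        counts[(row, col)] += 1
    # Flatten to a list that also includes the empty cells
    return [counts.get((r, c), 0) for r in range(grid) for c in range(grid)]


counts = cell_counts(seed=1)
empty = counts.count(0)
crowded = sum(1 for c in counts if c >= 3)
print(f"empty cells: {empty}, cells with 3 or more points: {crowded}")
```

With 100 points and 100 cells, each cell is missed by any one point with probability 0.99, so on average about 100 × 0.99^100, or roughly 37, of the cells stay empty. The exact figures vary from run to run, but blank patches and clumps are the norm rather than the exception.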

Isaac Asimov, the science fiction writer, put it more crisply:

People are entirely too disbelieving of coincidence. They are far too ready to dismiss it and to build arcane structures of extremely rickety substance in order to avoid it. I, on the other hand, see coincidence everywhere as an inevitable consequence of the laws of probability, according to which having no unusual coincidence is far more unusual than any coincidence could possibly be. (From The Planet that Wasn’t, originally published in The Magazine of Fantasy and Science Fiction, May 1975)

All there is to it?

So there we have the standard case for reducing what may seem like outlandish and mysterious coincidences to the mere operation of random chance. I have to admit, however, that I’m not entirely convinced by it. I have repeatedly experienced coincidences in my own life, from the trivial to the really pretty surprising – in a moment I’ll describe some of them. What I have noticed is that they often don’t have the character of being just random pairs or clusters of simple happenings, as you might expect, but seem to be linked to one another in strange and apparently meaningful ways, or to associate themselves with significant life events. Is this a mere subjective illusion, or could there be some hidden, organising principle governing happenings in our lives?

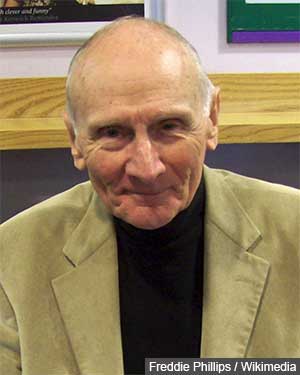

I don’t have an answer to that, but I’m certainly not the first to speculate about the question. This post was prompted by a book I recently read, Coincidence by Brian Inglis*. Inglis was a distinguished and well-liked journalist in the last century, having been a formative editor of The Spectator magazine and a prolific writer of articles and books. He was also a television presenter: those of a certain age may remember a long-running historical series on ITV, All Our Yesterdays, which Inglis presented. In addition, to the distaste of some, he wrote quite widely on paranormal phenomena.

The joker

In Coincidence he draws on earlier speculators about the topic, including the Austrian zoologist Paul Kammerer, who, after being suspected of scientific fraud in his research into amphibians, committed suicide in 1926. Kammerer was an enthusiastic collector of coincidence stories, and tried to provide a theoretical underpinning for them with his idea of ‘seriality’, which had some influence on Jung’s notion of synchronicity, in which meaning is placed alongside causality in its power to determine events. Kammerer also attracted the attention of Arthur Koestler, who figures in one of my previous posts. Koestler gave an account of the fraud case which was sympathetic to Kammerer, in The Case of the Midwife Toad. Koestler was also fascinated by coincidences and wrote about them in his book The Roots of Coincidence. Inglis, in his own book, recounts many accounts of surprising coincidences from ordinary lives. Many of his subjects have the feeling that there is some sort of capricious organising spirit behind these confluences of events, whom Inglis playfully personifies as ‘the joker’.

This putative joker certainly seems to have had a hand in my own life a number of times. Thinking of the subtitle of Inglis’s book (‘A Matter of Chance – or Synchronicity?’), the latter seems to be a factor with me. I have been so struck by the apparent significance of some of my own coincidences that I have recorded quite a number of them. First, here’s a simple example which shows that ‘interlinking’ tendency which occurs so often. (Names are changed in the accounts that follow.)

My own stories

From about 35 years ago: I spend an evening with my friend Suzy. We talk for a while about our mutual acquaintance Robert, whom we have both lost touch with; neither of us has seen him for a couple of years. Two days later, I park my car in a crowded North London street and Robert walks past just as I get out of the car, and I have a conversation with him. And then, I subsequently discover, the next day Suzy meets him quite by chance on a railway station platform. I don’t know whether the odds against this could be calculated, but they would be pretty huge. Each of the meetings, so soon after the conversation, would be unlikely, especially in crowded inner London as they were. And the pair of coincidences shows this strange interlinking that I mentioned. But I have more examples which are linked to one another in an even more elaborate way, as well as being attached to significant life events.

In 1982 I decided that, after nearly 14 years, it was time to leave the first company I had worked for long-term; let’s call it ‘company A’. During my time with them, a while before this, I’d shared a flat with a couple of colleagues for 5 years. At one stage we had a vacancy in the flat and advertised at work for a third tenant. A new employee of the company – we’ll call him Tony McAllister – quickly showed an interest. We felt a slight doubt about the rather pushy way he did this, pulling down our notice so that no one else would see it. But he seemed pleasant enough, and joined the flat. We should have listened to our doubts – he turned out to be definitely the most uncongenial person I have ever lived with. He consistently avoided helping with any of the housework and other tasks around the flat, and delighted in dismantling the engine of his car in the living room. There were other undesirable personal habits – I won’t trouble you with the details. Fortunately it wasn’t long before we all left the flat, for other reasons.

Back to 1982, and my search for a new job. A particularly interesting sounding opportunity came up, in a different area of work, with another large company – company B. I applied and got an interview with a man who would be my new boss if I got the job: we’ll call him Mark Cooper. He looked at my CV. “You worked at company A – did you know Tony McAllister? He’s one of my best friends.” Putting on my best glassy grin, I said that I did know him. And I did go on to get the job. Talking subsequently, we both eventually recalled that Mark had actually visited our flat once, very briefly, with Tony, and we’d met fleetingly. That would have been five years or so earlier.

About nine months into my work with company B I saw a job advertised in the paper while I was on the commuter train. I hadn’t been looking for a job, and the ad just happened to catch my eye as I turned the page. It was with a small company (company C), with requirements very relevant to what I was currently doing, and sounding really attractive – so I applied. While I was awaiting the outcome of this, I heard that my present employer, company B, was to stop investing in my current area of work, and I was moved to a different position. I didn’t like the new job at all, and so of course was pinning my hopes on the application I’d already made. However, oddly, the job I’d been given involved being relocated into a different building, and I was given an office with a window directly overlooking the building in which company C was based.

This seemed a good omen – and I subsequently was given an interview, and then a second one, with directors of company C. On the second one, my interviewer, ‘Tim Newcombe’, seemed vaguely familiar, but I couldn’t place him and thought no more of it. He evidently didn’t know me. Once again, I got the job: apparently it had been a close decision between me and one other applicant, from a field of about 50. And it wasn’t long before I found out why Tim seemed familiar: he was in fact married to someone I knew well in connection with some voluntary work I was involved with. On one occasion, I eventually realised, I had visited her house with some others and had very briefly met Tim. I went on to work for company C for nearly 12 years, until it disbanded. Subsequent to this both Tim and I worked on our own accounts, and we collaborated on a number of projects.

So far, therefore, two successive jobs where, for each, I was interviewed by someone whom I eventually realised I had already met briefly, and who had a strong connection to someone I knew. (In neither case was the connection related to the area of work, so that isn’t an explanation.)

The saga continues

A year or two after leaving company B, I heard that Mark Cooper had moved to a new job in company D, and in fact visited him there once in the line of work. Meanwhile, ten years after I had started the job in company C – and while I was still doing it – my wife and I, wanting to move to a new area, found and bought a house there (where we still live now, more than 20 years later). I then found out that the previous occupants were leaving because the father of the family had a new job – with, it turned out, company D. And on asking him more about it, it transpired that he was going to work with Mark Cooper, making an extraordinarily neat loop back to the original coincidence in the chain.

I’ve often mused on this striking series of connections, and wondered if I was fated always to encounter some bizarre coincidence every time I started new employment. However, after company C, I worked freelance for some years, and then got a job in a further company (my last before retirement). This time, there was no coincidence that I was aware of. But now, just in the last few weeks, that last job has become implicated in a further unlikely connection. This time it’s my son who has been looking for work. He told me about a promising opportunity he was going to apply for. I had a look at the company website and was surprised to see among the pictures of employees a man who had worked in the same office as me for the last four years or so – from the LinkedIn website I discovered he’d moved on a month after I retired. My son was offered an initial telephone interview – which (almost inevitably) turned out to be with this same man.

In gullible mode, I wondered to myself whether this was another significant coincidence. Well, whether I’m gullible or not, my son did go on to get the job. I hadn’t worked directly with the interviewer in question, and only knew him slightly; I don’t think he was aware of my surname, so I doubt that he realised the connection. My son certainly didn’t mention it, because he didn’t want to appear to be currying favour in any dubious way. And in fact this company that my son now works in turns out to have a historical connection with my last company – which perhaps explains the presence of his interviewer in it. But neither I nor my son was aware of any of this when he first became interested in the job.

Just one more

I’m going to try your patience with just one more of my own examples, and this involves the same son, but quite a few years back – in fact when he was due to be born. At the time our daughter was 2 years old, and if I was to attend the coming birth she would need to be babysat by someone. One friend, whom we’ll call Molly, said she could do this if it was at the weekend – so we had to find someone else for a weekday birth. Another friend, Angela, volunteered. My wife finally started getting labour pains, a little overdue, one Friday evening. So it looked as if the baby would arrive over the weekend and Molly was alerted. However, untypically for a second baby, this turned out to be a protracted process. By Sunday the birth started to look imminent, and Molly took charge of my daughter. But by the evening the baby still hadn’t appeared – we had gone into hospital once but were sent home again to wait. So we needed to change plans, and my daughter was taken to Angela, where she would stay overnight.

My son was finally born in the early hours of Monday morning, which was May 8th. And then the coincidence: it turned out that both Molly and Angela had birthdays on May 8th. What’s nice about this one is that it is possible to calculate the odds. There is that often quoted statistic that if there are 23 or more people in a room there is a greater than evens chance that at least two of them will share the same birthday. 23 seems a low number – but I’ve been through the maths myself, and it is so. However, in this case it’s a much simpler calculation: the chance would be 1 in 365 × 365 (ignoring leap years for simplicity), which is 1 in 133,225, or odds of 133,224 to 1 against. That’s unlikely enough – but once again I don’t feel that the calculations tell the full story. The odds I’ve worked out apply where any three people are taken at random and found all to share the same birthday. In this case we have the coincidence clustered around a significant event, the actual day of birth of one of them – and that seems to me to add an extra dimension that can’t so easily be quantified.
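
Both calculations are easy to check. The Python sketch below makes the usual simplifying assumptions: 365 equally likely birthdays, no leap years, and people's birthdays independent of one another. The function and variable names are only illustrative.

```python
from fractions import Fraction


def prob_shared_birthday(n, days=365):
    """Probability that at least two of n people share a birthday."""
    p_all_different = Fraction(1)
    for i in range(n):
        # Person i must avoid the i birthdays already taken
        p_all_different *= Fraction(days - i, days)
    return 1 - p_all_different


print(float(prob_shared_birthday(22)))  # just under one half
print(float(prob_shared_birthday(23)))  # just over one half

# Two particular friends both matching one fixed date (the baby's birthday)
p_both_match = Fraction(1, 365) ** 2
print(p_both_match)  # 1/133225
```

The room-of-23 figure comes from working out the chance that everyone's birthday is different and subtracting it from 1. The babysitter case is simpler because the date is already fixed by the birth, so each friend independently has a 1 in 365 chance of matching it.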

Malicious streak

Well, there you have it – random chance, or some obscure organising principle beyond our current understanding? Needless to say, that’s speculation which splits opinion along the lines I described in my post about the ‘iPhobia’ concept. As an admitted ‘iclaustrophobe’, I prefer to keep an open mind on it. But to return to Brian Inglis’s ‘joker’: Inglis notes that this imagined character seems to display a malicious streak from time to time: he quotes an example where estranged lovers are brought together by coincidence in awkward and ultimately disastrous circumstances. And add to that the observation of some of those looking into the coincidence phenomenon that their interest seems to attract further coincidences: when Arthur Koestler was writing about Kammerer, he described his life being suddenly beset by a “meteor shower” of coincidences, as if, he felt, Kammerer were emphasising his beliefs from beyond the grave.

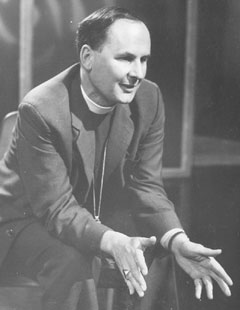

With both of those points in mind, I’d like to offer one further story. It was told to me by Jane O’Grady (real name this time), and I’m grateful to her for allowing me to include it here – and also for going to some trouble to confirm the details. Jane is a writer, philosopher and teacher. One day in late 1991, she and her then husband, philosopher Ted Honderich, gave a lunch to which they invited Brian Inglis. His book on coincidences – the one I’ve just read – had been published fairly recently, and a good part of their conversation was a discussion of that topic. A little over a year later, in early 1993, Jane was teaching a philosophy A-level class. After a half-time break, one of the students failed to reappear. His continuing absence meant that Jane had to give up waiting and carry on without him. He had shown himself to be somewhat unruly, and so this behaviour seemed to her at first to be irritatingly in character.

And so when he did finally appear, with the class nearly over, Jane wondered whether to believe his proffered excuse: he said he had witnessed a man collapsing in the street and had gone to help. But it turned out to be perfectly true. Unfortunately, despite his intervention, nothing could be done and the man had died. The coincidence, as you may have guessed, lay in the identity of the dead man. He was Brian Inglis.

*Brian Inglis, Coincidence: A Matter of Chance – or Synchronicity? Hutchinson, 1990