Commuting days until retirement: 108

After my last post, which, among other things, compared differing attitudes to death and its aftermath (or absence of one) on the part of Arthur Koestler and George Orwell, here’s another fruitful comparison. It seemed to arise by chance from my next two commuting books, and each of the two people I’m comparing, as before, has his own characteristic perspective on that matter. Unlike my previous pair, both could loosely be called scientists, and in each case the attitude expressed has a specific and revealing relationship with the writer’s work and interests.

The Mathematician

The first writer, whose book I came across by chance, has been known chiefly for mathematical puzzles and games. Martin Gardner was born in Oklahoma USA in 1914; his father was an oil geologist, and it was a conventionally Christian household. Although not trained as a mathematician, and going into a career as a journalist and writer, Gardner developed a fascination with mathematical problems and puzzles which informed his career – hence the justification for his half of my title.

This interest continued to feed the constant stream of books and articles he wrote, and he was eventually asked to write the Scientific American column Mathematical Games which ran from 1956 until the mid 1980s, and for which he became best known; his enthusiasm and sense of fun shine through the writing of these columns. At the same time he was increasingly concerned with the many types of fringe beliefs that had no scientific foundation, and was a founder member of CSICOP, the organisation dedicated to the exposing and debunking of pseudoscience. Back in February last year I mentioned one of its other well-known members, the flamboyant and self-publicising James Randi. By contrast, Gardner was mild-mannered and shy, averse from public speaking and never courting publicity. He died in 2010, leaving behind him many admirers and a two-yearly convention – the ‘Gathering for Gardner’.

Before learning more about him recently, and reading one of his books, I had known his name from the Mathematical Games column, and heard of his rigid rejection of things unscientific. I imagined some sort of skinflint atheist, probably with a hard-nosed contempt for any fanciful or imaginative leanings – however sane and unexceptionable they might be – towards what might be thought of as things of the soul.

How wrong I was. His book that I’ve recently read, The Whys of a Philosophical Scrivener, consists of a series of chapters with titles of the form ‘Why I am not a…’ and he starts by dismissing solipsism (who wouldn’t?) and various forms of relativism; it’s a little more unexpected that determinism also gets short shrift. But in fact by this stage he has already declared that

I myself am a theist (as some readers may be surprised to learn).

I was surprised, and also intrigued. Things were going in an interesting direction. But before getting to the meat of his theism he spends a good deal of time dealing with various political and economic creeds. The book was written in the mid 80s, not long before the collapse of communism, which he seems to be anticipating (Why I am not a Marxist). But equally he has little time for Reagan or Thatcher, laying bare the vacuity of their over-simplistic political nostrums (Why I am not a Smithian).

Soon after this, however, he is striding into the longer grass of religious belief: Why I am not a Polytheist; Why I am not a Pantheist – so what is he? The next chapter heading is a significant one: Why I do not Believe the Existence of God can be Demonstrated. This is the key, it seems to me, to Gardner’s attitude – one to which I find myself sympathetic. Near the beginning of the book we find:

My own view is that emotions are the only grounds for metaphysical leaps.

I was intrigued by the appearance of the emotions in this context: here is a man whose day job is bound up with his fascination for the powers of reason, but who is nevertheless acutely conscious of the limits of reason. He refers to himself as a ‘fideist’ – one who believes in a god purely on the basis of faith, rather than any form of demonstration, either empirical or through abstract logic. And if those won’t provide a basis for faith, what else is there but our feelings? This puts Gardner nicely at odds with the modish atheists of today, like Dawkins, who never tires of telling us that he too could believe if only the evidence were there.

But at the same time he is squarely in a religious tradition which holds that ultimate things are beyond the instruments of observation and logic that are so vital to the secular, scientific world of today. I can remember my own mother – unlike Gardner a conventional Christian believer – being very definite on that point. And it reminds me of some of the writings of Wittgenstein; Gardner does in fact refer to him, in the context of the freewill question. I’ll let him explain:

A famous section at the close of Ludwig Wittgenstein’s Tractatus Logico-Philosophicus asserts that when an answer cannot be put into words, neither can the question; that if a question can be framed at all, it is possible to answer it; and that what we cannot speak about we should consign to silence. The thesis of this chapter, although extremely simple and therefore annoying to most contemporary thinkers, is that the free-will problem cannot be solved because we do not know exactly how to put the question.

This mirrors some of my own thoughts about that particular philosophical problem – a far more slippery one than those on either side of it often claim, in my opinion (I think that may be a topic for a future post). I can add that Gardner was also on the unfashionable side of the question which came up in my previous post – that of an afterlife; and again he holds this out as a matter of faith rather than reason. He explores the philosophy of personal identity and continuity in some detail, always concluding with the sentiment ‘I do not know. Do not ask me.’ His underlying instinct seems to be that there has to be something more than our bodily existence, given that our inner lives are so inexplicable from the objective point of view – so much more than our physical existence. ‘By faith, I hope and believe that you and I will not disappear for ever when we die.’ By contrast, Arthur Koestler, you may remember, wrote in his suicide note of ‘tentative hopes for a depersonalised afterlife’ – but, as it turned out, these hopes were based partly on the sort of parapsychological evidence which was anathema to Gardner.

And of course Gardner was acutely aware of another related mystery – that of consciousness, which he finds inseparable from the issue of free will:

For me, free will and consciousness are two names for the same thing. I cannot conceive of myself being self-aware without having some degree of free will… Nor can I imagine myself having free will without being conscious.

He expresses utter dissatisfaction with the approach of arch-physicalists such as Daniel Dennett, who, as he says, ‘explains consciousness by denying that it exists’. (I attempted to puncture this particular balloon in an earlier post.)

Gardner places himself squarely within the ranks of the ‘mysterians’ – a deliberately derisive label applied by their opponents to those thinkers who conclude that these matters are mysteries which are probably beyond our capacity to solve. Among their ranks is Noam Chomsky: Gardner cites a 1983 interview with the grand old man of linguistics, in which he expresses his attitude to the free will problem (scroll down to see the relevant passage).

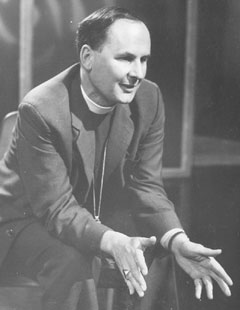

The Surgeon

And so to the surgeon of my title, and if you’ve read one of my other blog posts you will already have met him – he’s a neurosurgeon named Henry Marsh, and I wrote a post based on a review of his book Do No Harm. Well, now I’ve read the book, and found it as impressive and moving as the review suggested. Unlike many in his profession, Marsh is a deeply humble man who is disarmingly honest in his account of the emotional impact of the work he does. He is simultaneously compelled towards, and fearful of, the enormous power of the neurosurgeon both to save and to destroy. His narrative swings between tragedy and elation, by way of high farce when he describes some of the more ill-conceived management ‘initiatives’ at his hospital.

The interesting point of comparison with Gardner is that Marsh – a man who daily manipulates what we might call physical mind-stuff – the brain itself – is also awed and mystified by its powers:

There are one hundred billion nerve cells in our brains. Does each one have a fragment of consciousness within it? How many nerve cells do we require to be conscious or to feel pain? Or does consciousness and thought reside in the electrochemical impulses that join these billions of cells together? Is a snail aware? Does it feel pain when you crush it underfoot? Nobody knows.

The same sense of mystery and wonder as Gardner’s; but approached from a different perspective:

Neuroscience tells us that it is highly improbable that we have souls, as everything we think and feel is no more or no less than the electrochemical chatter of our nerve cells… Many people deeply resent this view of things, which not only deprives us of life after death but also seems to downgrade thought to mere electrochemistry and reduces us to mere automata, to machines. Such people are profoundly mistaken, since what it really does is upgrade matter into something infinitely mysterious that we do not understand.

This of course is the perspective of a practical man – one who is emphatically working at the coal face of neurology, and far more familiar with the actual material of brain tissue than armchair speculators like me. While I was reading his book, although deeply impressed by this man’s humanity and integrity, what disrespectfully came to mind was a piece of irreverent humour once told to me by a director of a small company I used to work for which was closely connected to the medical industry. It was a sort of a handy cut-out-and-keep guide to the different types of medical practitioner:

Surgeons do everything and know nothing. Physicians know everything and do nothing. Psychiatrists know nothing and do nothing. Pathologists know everything and do everything – but the patient’s dead, so it’s too late.

Grossly unfair to all of them, of course, but nonetheless funny, and perhaps containing a certain grain of truth. Marsh, belonging to the first category, perhaps embodies some of the aversion from dry theory that this caricature hints at: what matters to him ultimately, as a surgeon, is the sheer down-to-earth physicality of his work, guided by the gut instincts of his humanity. We hear from him about some members of his profession who seem aloof from the enormity of the dangers it embodies, and seem able to proceed calmly and objectively with what he sees almost as the detachment of the psychopath.

Common ground

What Marsh and Gardner seem to have in common is the instinct that dry, objective reasoning only takes you so far. Both trust the power of their own emotions, and their sense of awe. Both, I feel, are attempting to articulate the same insight, but from widely differing standpoints.

Two passages, one from each book, seem to crystallize both the similarities and differences between the respective approaches of the two men, both of whom seem to me admirably sane and perceptive, if radically divergent in many respects. First Gardner, emphasising, in a Wittgensteinian way, that describing how things appear to be is perhaps a more useful activity than attempting to pursue any ultimate reasons:

There is a road that joins the empirical knowledge of science with the formal knowledge of logic and mathematics. No road connects rational knowledge with the affirmations of the heart. On this point fideists are in complete agreement. It is one of the reasons why a fideist, Christian or otherwise, can admire the writings of logical empiricists more than the writings of philosophers who struggle to defend spurious metaphysical arguments.

And now Marsh – mystified, as we have seen, as to how the brain-stuff he manipulates daily can be the seat of all experience – having a go at reading a little philosophy in the spare time between sessions in the operating theatre:

As a practical brain surgeon I have always found the philosophy of the so-called ‘Mind-Brain Problem’ confusing and ultimately a waste of time. It has never seemed a problem to me, only a source of awe, amazement and profound surprise that my consciousness, my very sense of self, the self which feels as free as air, which was trying to read the book but instead was watching the clouds through the high windows, the self which is now writing these words, is in fact the electrochemical chatter of one hundred billion nerve cells. The author of the book appeared equally amazed by the ‘Mind-Brain Problem’, but as I started to read his list of theories – functionalism, epiphenomenalism, emergent materialism, dualistic interactionism or was it interactionistic dualism? – I quickly drifted off to sleep, waiting for the nurse to come and wake me, telling me it was time to return to the theatre and start operating on the old man’s brain.

I couldn’t help noticing that these two men – one unconventionally religious and the other not religious at all – seem between them to embody those twin traditional pillars of the religious life: faith and works.