I have let rather too much time elapse since my last post. What are my excuses? Well, I mentioned in one post how helpful my commuter train journeys were in encouraging the right mood for blog-writing – and since I retired, such journeys have been few and far between. To get on the train every time I felt a blog-post coming on would not be a very pension-friendly approach, given current fares. My other excuse is the endless number of tasks around the house and garden that have been neglected until now. At least we are now starting to create a more attractive garden, and recently took delivery of a summerhouse: I am hoping that this could become another blog-friendly setting.

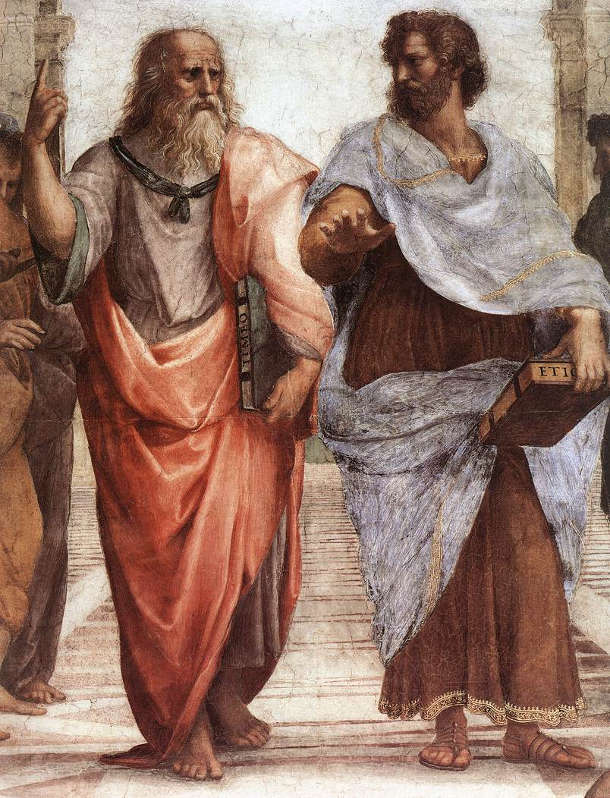

tvtropes.org

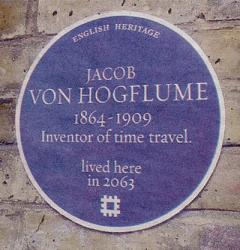

But since a lapse of time is my starting point, I could get back in by thinking again about the nature of time. Four years back (Right Now, 23/3/13) I speculated on the issue of why we experience a subjective ‘now’ which doesn’t seem to have a place in objective physical science. Since then I’ve come across various ruminations on the existence or non-existence of time as a real, out-there, component of the world’s fabric. I might have more to say about this in the, er, future – but what appeals to me right now is the notion of time travel. Mainly because I would welcome a chance to deal with my guilty feelings by going back in time and plugging the long gap in this blog over the past months.

I recently heard about an actual time travel experiment, carried out by no less a person than Stephen Hawking. In 2009, he held a party for time travellers. What marked it out as such was that he sent out the invitations only after the party had taken place. I don’t know exactly who was invited; but, needless to say, the canapés remained uneaten and the champagne undrunk. I can’t help feeling that if I’d tried this, and no one had turned up, my disappointment would rather destroy any motivation to send out the invitations afterwards. Should I have sent them anyway, finally to prove time travel impossible? I suspect I’d want to sit on my hands, and leave the possibility open for others to explore. But the converse of this is the thought that, if the time travellers had turned up, I would be honour-bound to despatch those invites; the alternative would be some kind of universe-warping paradox. In that case I’d be tempted to hold them back and see what happened.

Elsewhere, in the same vein, Hawking has remarked that the impossibility of time travel into the past is demonstrated by the fact that we are not invaded by hordes of tourists from the future. But there is a rather more chilling explanation for their absence: namely that time travel is theoretically possible, but we have no future in which to invent it. Since last year that unfortunately looks a little more likely, given the current occupant of the White House. That such a president is possible makes me wonder whether the universe is already a bit warped.

Should you wish to escape this troublesome present into a different future, whatever it might hold, it can of course be done. As Einstein famously showed in 1905, it’s just a matter of inventing for yourself a spaceship that can accelerate to nearly the speed of light, and taking a round trip in it. And this isn’t entirely science fiction: astronauts and satellites – even airliners – regularly take trips of tiny fractions of a second into the future; indeed, our now familiar satnav devices wouldn’t work if relativistic effects on the satellites’ clocks weren’t taken into account.
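For the curious, here is a rough Python sketch of the arithmetic behind that claim, using Einstein’s Lorentz factor γ = 1/√(1 − v²/c²). The speed plugged in, about 3.87 km/s, is an approximate figure for a GPS satellite’s orbital speed, and the function names are my own inventions. It covers only the velocity effect of special relativity: for GPS satellites, the weaker gravity at their altitude makes their clocks run faster by roughly 45 microseconds a day, which more than cancels the slowing of about 7 microseconds a day computed here.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, metres per second
SECONDS_PER_DAY = 86_400.0


def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed v in metres per second."""
    if not 0.0 <= v < C:
        raise ValueError("speed must be at least 0 and less than c")
    beta_sq = (v / C) ** 2
    return 1.0 / math.sqrt(1.0 - beta_sq)


def time_lag_per_day(v: float) -> float:
    """Seconds per day by which a clock moving at speed v falls behind a stationary one.

    Special relativity only (no gravitational effect). The moving clock ticks
    1/gamma as fast, so the lag is day * (1 - 1/gamma). For speeds far below c
    that difference is tiny, so it is computed as -expm1(0.5 * log1p(-beta^2)),
    which equals 1 - sqrt(1 - beta^2) without losing precision to cancellation.
    """
    if not 0.0 <= v < C:
        raise ValueError("speed must be at least 0 and less than c")
    beta_sq = (v / C) ** 2
    return SECONDS_PER_DAY * -math.expm1(0.5 * math.log1p(-beta_sq))


if __name__ == "__main__":
    gps_speed = 3874.0  # approximate GPS orbital speed in m/s (assumed figure)
    print(f"GPS velocity lag: {time_lag_per_day(gps_speed) * 1e6:.1f} microseconds per day")
    print(f"Lorentz factor at 0.99c: {lorentz_factor(0.99 * C):.2f}")
```

On these assumptions the lag comes out at about 7.2 microseconds a day, while the Lorentz factor at 99% of light speed is about 7.09: so, ignoring the acceleration and turnaround, a traveller spending one year aboard such a ship would come home to find around seven years had passed.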

But the problem of course arises if you find the future you’ve travelled to isn’t one you like. (Trump, or some nepotic Trumpling, as world president? Nuclear disaster? Both of these?) Whether you can get back again by travelling backward in time is not a question that’s really been settled. Indeed, it’s the ability to get at the past that raises all the paradoxes – most famously what would happen if you killed your grandparents or stopped them getting together.

Marty McFly with his teenage mother

This is a furrow well ploughed in science fiction, of course. You may remember the Marty McFly character in the film Back to the Future, who embarks on a visit to the past enabled by his mad scientist friend. It’s one way of escaping from his dysfunctional, feckless parents, but having travelled back a generation in time he finds himself being romantically pursued by his teenage mother. He eventually manages to redirect her towards his young father, and on returning to the present finds his parents transformed into an impossibly hip and successful couple.

Then there’s Ray Bradbury’s story A Sound of Thunder, in which tourists can travel back to hunt dinosaurs – but only those which were known to be about to die in any case, and any bullets must then be removed from their bodies. As a further precaution, the would-be hunters are kept off the ground by a levitating path, to prevent any other paradox-inducing changes to the past. One bolshie traveller, however, breaks the rules, steps off the path, and ends up with a crushed butterfly on the sole of his boot. On returning to the present he finds that the language is subtly different, and that the man who had been the defeated fascist candidate for president has now won the election. (So, thinking of my earlier remarks, could some prehistoric butterfly crusher, yet to embark on his journey, be responsible for the current world order?)

My favourite paradox is the one portrayed in a story called The Muse by Anthony Burgess, in which – to leave a lot out – a time-travelling literary researcher manages to meet William Shakespeare and question him about his work. Shakespeare’s eye alights on the traveller’s copy of the complete works, which he peruses and makes off with, intending to mine it for ideas. This seems like the ideal solution for struggling blog-writers like me: I could travel forward in time, copy what I’d written on to a flash drive, then return to the present and paste it in here. Much easier.

But these thoughts put me in mind of a more philosophical issue with time which has always fascinated me – namely, whether it’s reversible. We know how to travel forward in time; but when it comes to travelling backward, there are various theories as to how it might be done, and no-one is very sure. Does this indicate a fundamental asymmetry in the way time works? Of course, this is a question that has been examined in much greater detail in another context: the second law of thermodynamics, we are told, says it all.

Let’s just review those ideas. Think of running a film in reverse. Might it show anything that could never happen in real, forward time? Well, if it were some sort of space film showing planets orbiting the sun, or a satellite orbiting the earth, then either direction is possible. But, back on earth, think of all those people you’d see walking backwards. On the face of it, people can walk backwards, so what’s the problem? Here’s one of the many I could think of: imagine that one such person is enjoying a backward country walk on a path through the woods. As she approaches a branch protruding from a sapling beside the path, the branch suddenly whips sideways towards her as if anticipating her passing, and then, laying itself against her body, unbends itself as she backward-walks by, and has returned to its rest position as she recedes. Possible? Obviously not. But is it?

I’m going to argue that there is no fundamental ‘arrow of time’: despite the evident truth of the laws of thermodynamics and the irresistible tendency we observe towards increasing disorder, or entropy, there’s nothing ultimately irreversible about physical processes. I’ve deliberately chosen an example which seems to make my case harder to maintain, to see if I can explain my way out of it. You will have had the experience of walking past a branch which projects across your path, noticing how your body bends it forwards as you pass, and seeing it spring back to its former position as you continue on. Could we envisage a sequence of events in the real world where all this happened in reverse?

Before answering that I’m going to look at a more familiar type of example. I remember being impressed many years ago by an example of the type of film I mentioned, illustrating the idea of entropy. It showed someone holding a cup of tea, and then letting go of it, with the expected results. Then the film was reversed. The mess of spilt tea and broken china on the floor drew itself together, and as the china pieces reassembled themselves into a complete cup and saucer, the tea obediently gathered itself back into the cup. As this process completed the whole assembly launched itself from the floor and back into the hands of its owner.

Obviously, that second part of the sequence would never happen in the real world. It’s an example of how, left to itself, the physical world will always progress to a state of greater disorder, or entropy. We can even express the degree of entropy mathematically, using information theory. Case closed, then – apart, perhaps, from biological evolution? And even then it can be shown that if some process – like the evolution of a more sophisticated organism – decreases entropy, it will always be balanced by a greater increase elsewhere; and so the universe’s total amount of entropy increases. The same applies to our own attempts to impose order on the world.

So how could I possibly plead for reversibility of time? Well, I tend to think that this apparent ‘arrow’ is a function of our point of view as limited creatures, and of our very partial perception of the universe. I would ask you to imagine, for a moment, some far more privileged being – some sort of god, if you like – who is able to track all the universe’s individual particles and fields, and what they are up to. Should this prodigy turn her attention to our humble cup of tea, what she saw would, I think, be very different from the scene as experienced through our own senses. From her perspective, the clean lines of the china cup which we see would become less defined – lost in a turmoil of vibrating molecules, themselves constantly undergoing physical and chemical change. The distinction between the shattered cup on the floor and the unbroken one in the drinker’s hands would be less clear.

What I’m getting at is the fact that what we think of as ‘order’ in our world is an arrangement that seems significant only from one particular point of view determined by the scale and functionality of our senses: the arrangement we think of as ‘order’ floats like an unstable mirage in a sea of chaos. As a very rough analogy, think of those patterns of coloured dots used to detect colour blindness. You can see the number in the one I’ve included only if your retinal cells function in a certain way; otherwise all you’d see would be random dots.


And, in addition to all this, think of the many arrangements which (to us) might appear to have ‘order’ – all the possible drinks in the cup, all the possible cup designs – etc, etc. But compared to all the ‘disordered’ arrangements of smashed china, splattered liquid and so forth, the number of potential configurations which would appeal to us as being ‘ordered’ is truly infinitesimal. So it follows that the likelihood of moving from a state we regard as ‘disordered’ to one of ‘order’ is unimaginably slim; but not, in principle, impossible.
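To put some rough numbers on this, here is a toy model in Python, offered as my own illustration rather than anything rigorous: n particles, each of which can sit in either the left or the right half of a box. ‘All on the left’ stands in for the single ‘ordered’ arrangement, and each possible split is scored with Boltzmann’s formula S = k ln W, where W is the number of arrangements giving that split. The function names are invented for the purpose.

```python
import math


def arrangements(n: int, k_left: int) -> int:
    """Number of ways to put exactly k_left of n distinguishable particles in the left half."""
    return math.comb(n, k_left)


def entropy(n: int, k_left: int) -> float:
    """Boltzmann entropy S = ln W for that split, in units of Boltzmann's constant k."""
    return math.log(arrangements(n, k_left))


def chance_all_left(n: int) -> float:
    """Probability that a random arrangement puts every particle in the left half."""
    # Each particle independently picks a half, so there are 2**n equally likely arrangements.
    return arrangements(n, n) / 2 ** n


if __name__ == "__main__":
    n = 100
    print(f"Even split: {arrangements(n, n // 2):.3e} arrangements, S = {entropy(n, n // 2):.1f} k")
    print(f"All on the left: {arrangements(n, n)} arrangement, S = {entropy(n, n):.1f} k")
    print(f"Chance of all on the left: {chance_all_left(n):.1e}")
```

With a mere hundred particles, the even split can be achieved in about 10^29 ways, while the ‘ordered’ state has just one, with a chance of roughly 8 × 10^-31. A real cup of tea involves trillions of trillions of molecules, so the odds there are incomparably longer; but, as the model shows, never actually zero.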

So let’s imagine that one of these one-in-a-squillion chances actually comes off. There’s the smashed cup and mess of tea on the floor, embedded in a maze of vibrating molecules making up the floor, the surrounding air, and so on. And in this case it so happens that the molecular impacts between the fragments of cup, the tea and their surroundings combine so as to nudge them all back into their ‘ordered’ configuration, and boost them off the floor and back into the hands of the somewhat mystified drinker.

Yes, the energy is there to make that happen – it just has to come together in exactly the correct, fantastically unlikely way. I don’t know how to calculate the improbability of this, but I should imagine that to see it happen we would need to do continual trials for a time period which is some vast multiple of the age of the universe. (Think monkeys typing the works of Shakespeare, and then multiply by some large number.) In other words, of course, it just doesn’t happen in practice.
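For a sense of scale, the monkey comparison can at least be put into rough numbers for one short phrase. This sketch assumes a 27-key typewriter (26 letters plus a space), one completely random attempt per second, each attempt a fresh string of the right length, and an age of the universe of about 13.8 billion years; the names are hypothetical.

```python
AGE_OF_UNIVERSE_YEARS = 13.8e9          # approximate
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year


def expected_attempts(phrase: str, alphabet_size: int = 27) -> int:
    """Mean number of independent random strings, each len(phrase) keys long,
    needed before one matches the phrase exactly (geometric distribution, mean 1/p)."""
    return alphabet_size ** len(phrase)


def universe_ages_needed(phrase: str, attempts_per_second: float = 1.0,
                         alphabet_size: int = 27) -> float:
    """How many ages of the universe those attempts would take at the given rate."""
    seconds = expected_attempts(phrase, alphabet_size) / attempts_per_second
    return seconds / SECONDS_PER_YEAR / AGE_OF_UNIVERSE_YEARS


if __name__ == "__main__":
    phrase = "to be or not to be"
    print(f"Expected attempts: {expected_attempts(phrase):.2e}")
    print(f"Ages of the universe needed: {universe_ages_needed(phrase):.1e}")
```

That comes to around 6 × 10^25 attempts, or roughly a hundred million ages of the universe, for just eighteen characters; the self-assembling teacup, with its countless molecules, would be vastly worse still.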

But, looked at another way, such unlikely things do happen. Think of when we originally dropped the cup and ended up with a mess on the floor: one particular mess out of the myriad of others that could have been created had the cup been dropped at a slightly different angle, had the floor been dirtier, had the weather been different, and so on. How likely was that exact configuration of mess? Fantastically unlikely, of course – but it happened. We’d never in practice be able to produce it a second time.

So of all these innumerable configurations of matter – whether or not they are among the tiny minority that seem to us ‘ordered’ – one comes about in each of the events we’ve been considering. The universe at large is indifferent to our notion of ‘order’, and at each juncture throws up some random selection from the unthinkably large number of possibilities. It’s just that the ordered states are so few in number compared to the disordered ones that they never in practice come about spontaneously, but only when we deliberately foster them into being, by doing such things as manufacturing teacups, or making tea.

Let’s return, then, to the branch that our walker brushes past on the woodland footpath, and give that a similar treatment. It’s a bit simpler, if anything: we just require the astounding coincidence that, as the backward walker approaches the branch, the random motions of an unimaginably large number of air molecules happen to combine to give the branch a series of rhythmic, increasing nudges. It appears to oscillate with increasing amplitude until one final nudge lays it against the walker’s body just as she passes. Not convinced? Well, this is just one of the truly countless possible histories of the movement of a vast number of air molecules – one which happens to have a consequence we can see.

Remember that the original Robert Brown, of Brownian motion fame, did see random movements of pollen grains in water; since it didn’t occur to him that water molecules were responsible, he thought it was a property of the pollen grains. Should we happen to witness such an astronomically unlikely movement of the tree, we would suspect some mysterious bewitchment of the tree itself, rather than one specific and improbable combination of air molecule movements.

You’ll remember that I was earlier reflecting that we know how to travel forwards in time, but that backward time travel is more problematic. So doesn’t this indicate another asymmetry – further evidence of an arrow of time? Well, I think the right way of thinking about this emerges when we remember that this very possibility of time travel is a consequence of a theory called ‘relativity’. So think relative. We know how to move forward in time relative to other objects in our vicinity. Equally, we know how they could move forward in time relative to us – which of course means that we’d be moving backward relative to them. No asymmetry there.

Maybe the one asymmetry in time which can’t be analysed away is our own subjective experience of moving constantly from a ‘past’ into a ‘future’, as defined by our subjective ‘now’. But, as I was pointing out three years ago, this seems to be more a property of ourselves as experiencing creatures than of the objective universe ‘out there’.

I’ll leave you with one more apparent asymmetry. If processes are reversible in time, why do we only have records of the past, and not records of the future? Well, I’ve gone on long enough, so in the best tradition of lazy writers, I will leave that as an exercise for the reader.